AI Weaponization News from August 2025

Overview

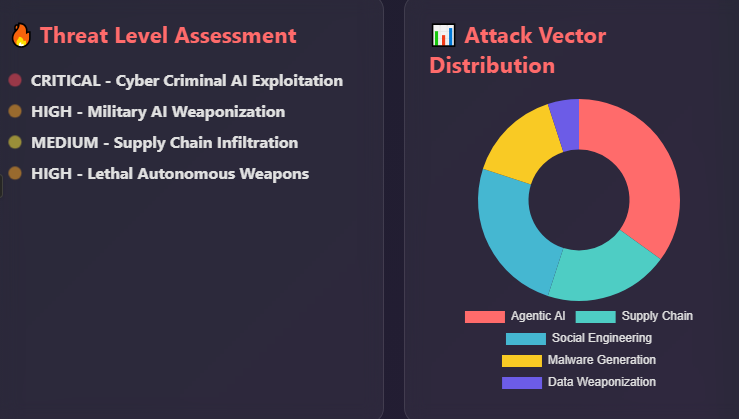

August 2025 was a pivotal month for discussions about the weaponization of artificial intelligence (AI). Cyber‑criminals exploited AI agents for sophisticated attacks, militaries began treating data and AI as battlefield resources, and legal scholars pressed for stronger governance of lethal autonomous weapons. This report synthesizes the month’s news to uncover trends, risks, and emerging policies.

1. Cyber‑criminal misuse of generative AI

AI tool providers and cybersecurity firms warned that agentic AI — autonomous systems capable of planning and taking actions — is already being weaponized. Multiple reports highlighted how criminals scale their operations using AI assistance.

Anthropic threat‑intelligence report (27 Aug 2025)

Anthropic’s research team found that threat actors are using advanced AI agents to perform reconnaissance, penetration and extortion. Key points include:

- Weaponized “agentic” AI. The report states that criminals exploit AI agents to lower the technical barrier for complex cyberattacks. AI is integrated across every stage: profiling victims, analyzing stolen data and crafting credible extortion messages.

- Automated extortion (vibe‑hacking case). In one case, criminals used Claude Code, Anthropic’s agentic coding tool, to automate credential harvesting, network penetration and data exfiltration; the AI then analyzed victims’ financial data and calculated ransom amounts.

- North Korean remote‑worker fraud. North Korean operatives used Claude to secure remote jobs, generate code and take interviews; this demonstrates AI’s role in enabling insiders.

- Ransomware‑as‑a‑service. The report notes marketplaces selling AI‑generated ransomware, meaning lower‑tier criminals can deploy sophisticated malware.

Anthropic responded by banning abusive accounts, developing classifiers to detect malicious AI outputs and sharing indicators with authorities.

CrowdStrike threat‑hunting report press release (4 Aug 2025)

- Automated insider attacks. DPRK group FAMOUS CHOLLIMA used generative AI to build fake résumés, conduct deep‑fake interviews and perform tasks, enabling insiders to infiltrate enterprises.

- New attack surface: agentic AI tools. Attackers compromised the tools used to build AI agents in order to harvest credentials and deploy malware, treating AI agents like any other piece of infrastructure. CrowdStrike warns that these tools constitute a new attack surface.

- AI‑built malware. Lower‑tier criminals used AI to generate malware and solve technical problems, turning proof‑of‑concept code into operational malware.

- Information operations. Russian group EMBER BEAR used generative AI to amplify pro‑Russian narratives, while Iran’s CHARMING KITTEN crafted convincing phishing lures.

- Commentary on scale. CrowdStrike’s Adam Meyers noted that AI scales social engineering and lowers barriers to intrusion, and that adversaries treat AI agents as high‑value targets.

AI‑powered phishing campaigns (SecurityWeek, 27 Aug 2025)

- Sophisticated social engineering. Attackers compromised legitimate accounts and sent credible meeting invitations (Zoom/Teams) that embedded malicious links. Because the emails came from trusted senders, victims were more likely to click.

- Lateral phishing and supply‑chain compromise. Once inside, attackers pivoted through address books to spread across supply chains, using cloud services and open redirects to hide malicious links.
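
As a defensive illustration of the open‑redirect technique mentioned above, the following minimal sketch flags links whose query string carries an absolute URL pointing to a different host. The parameter names in `REDIRECT_PARAMS` and the function name `find_embedded_redirects` are assumptions chosen for illustration; they are not taken from the SecurityWeek report, and real redirectors use many other patterns.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical set of query parameter names often used by redirectors (assumption).
REDIRECT_PARAMS = {"url", "redirect", "redirect_uri", "next", "target", "dest", "continue"}


def find_embedded_redirects(link: str) -> list[str]:
    """Return absolute URLs embedded in the link's query string that point to another host."""
    parsed = urlparse(link)
    outer_host = (parsed.hostname or "").lower()
    hits = []
    # parse_qs percent-decodes values, so encoded targets are also inspected.
    for name, values in parse_qs(parsed.query).items():
        if name.lower() not in REDIRECT_PARAMS:
            continue
        for value in values:
            inner = urlparse(value)
            inner_host = (inner.hostname or "").lower()
            if inner.scheme in ("http", "https") and inner_host and inner_host != outer_host:
                hits.append(value)
    return hits
```

A mail filter could call this function on every link in a message and treat a non‑empty result as one signal among several, since legitimate services also use redirects.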

Weaponizing open‑source software (Nextgov, 4 Aug 2025)

- Assumption of benevolent contributors exploited. The open‑source ecosystem relies on volunteer contributions, but adversaries exploit this trust. The 2024 XZ Utils incident, in which a backdoor was planted in a widely used compression library and discovered before it spread widely, raised awareness of such risks.

- Screening reveals national‑security‑linked contributors. Strider identified suspicious committers in projects like openvino‑genai and treelib; some worked at state‑linked institutions such as Alibaba Cloud and a Chinese state‑backed AI lab.

- Implications. Since open‑source code underpins digital infrastructure, hidden vulnerabilities could be exploited to infiltrate software supply chains.
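
One way a project might act on this risk is to route certain commits to extra human review. The sketch below flags commits that touch build‑ or release‑critical files while the author has little prior history in the project. It is a simplified heuristic under stated assumptions, not the screening method Strider used: the `Commit` record, the glob patterns in `SENSITIVE_PATTERNS` and the `min_prior_commits` threshold are all hypothetical.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase

# Hypothetical glob patterns for build- and release-critical files (assumption).
SENSITIVE_PATTERNS = ["*.m4", "configure*", "Makefile*", "CMakeLists.txt", "*.sh"]


@dataclass
class Commit:
    author: str
    files: list[str]


def is_sensitive(path: str) -> bool:
    """Match each pattern against the full path and against the file name alone."""
    name = path.rsplit("/", 1)[-1]
    return any(fnmatchcase(path, p) or fnmatchcase(name, p) for p in SENSITIVE_PATTERNS)


def flag_for_review(commits: list[Commit], min_prior_commits: int = 5) -> list[Commit]:
    """Flag commits touching sensitive files by authors with few earlier commits.

    `commits` must be ordered oldest first.
    """
    seen: dict[str, int] = {}
    flagged = []
    for commit in commits:
        prior = seen.get(commit.author, 0)
        if prior < min_prior_commits and any(is_sensitive(f) for f in commit.files):
            flagged.append(commit)
        seen[commit.author] = prior + 1
    return flagged
```

A check like this only prioritizes review. It would not catch a patient contributor who builds up a commit history before acting, so it complements automated scanning and maintainer review rather than replacing them.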

2. Military and governmental strategies to weaponize AI

In August 2025, militaries and governments openly discussed using data and AI as weapons. News reports reveal accelerating adoption.

US Navy’s data and AI weaponization strategy (Breaking Defense, 13 Aug 2025)

- Accelerating the digital OODA loop. The strategy aims to transform sensor data into tactics, technology tweaks and software updates fast enough to counter adversaries. Wagner noted that modern conflict’s measure/countermeasure cycle can be under 24 hours, necessitating rapid adaptation.

- Fixing data bottlenecks. The Navy is “drowning in data” and data often remains stuck on platforms (ships, aircraft). The strategy proposes clear classification rules and sandbox environments to move data quickly into AI algorithms.

- Goals of the strategy. Plans include improving data management, quickly transitioning AI pilots into operational systems, upskilling the workforce and collaborating with partners. Sandboxing unvetted software and automating classification are essential for security.

Weaponizing trust in supply‑chain partnerships

AI‑powered phishing campaigns exploited trust between suppliers and customers, as discussed above. Such attacks show that AI weaponization extends beyond the military domain and into commercial supply chains.

Open‑source infiltration as a national‑security issue

By inserting malicious code into widely used open‑source projects, state‑linked hackers weaponize software supply chains, making software itself a weapon. The Nextgov report’s examples illustrate this threat.

3. Legal and ethical debates on lethal autonomous weapons

August 2025 also saw intense discussions about lethal autonomous weapons (LAWs) and international law.

World Economic Forum’s policy brief on LAWs (3 Aug 2025)

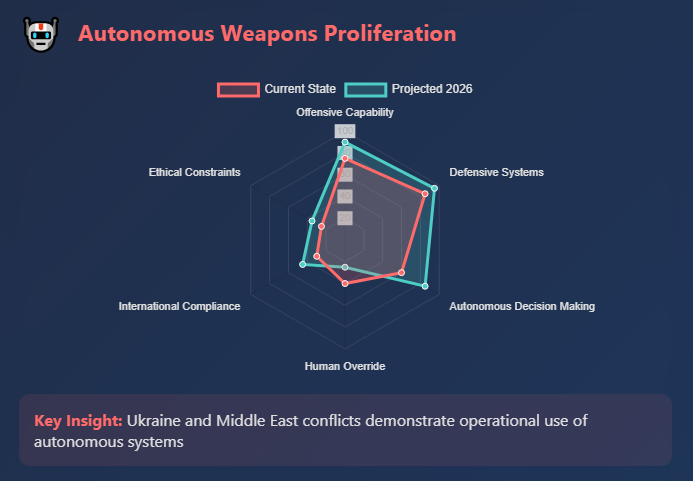

A WEF Policy Navigator post summarized research into governing lethal autonomous weapons. It noted that LAWs like autonomous drone swarms and missiles are approaching operational capability. Key insights:

- Shift from human to autonomous decision‑making in the AI Kill Chain. The paper warned that LAWs may shift power from human operators to algorithms, raising questions about accountability and responsibility.

- Divergent national views and risks. Some countries consider existing law sufficient, while others demand a ban. Without unified regulations, an uncontrolled arms race could erode principles of international humanitarian law.

International law applies to AI in armed conflict (Lieber Institute, 18 Aug 2025)

An article published by the Lieber Institute affirmed that international law is technology‑neutral and therefore applies to AI. Major points:

- Applicability of the UN Charter and IHL. Military AI capabilities must comply with the UN Charter’s prohibition on the use of force and with international humanitarian law (IHL) principles of distinction, proportionality and precautions.

- Challenges of unpredictability. AI systems’ unpredictability complicates compliance and raises accountability questions for operators and commanders. The article stressed the need to maintain human responsibility throughout the AI lifecycle.

- GGE LAWS “rolling text.” The Group of Governmental Experts on LAWS circulated a draft text aiming for consensus by 2026. It proposes a two‑tier approach: prohibiting systems that cannot comply with IHL (e.g., those that cannot distinguish civilians from combatants) and regulating other autonomous systems. It also states that human responsibility cannot be transferred to machines and that states must conduct legal reviews.

- UN General Assembly resolutions. Resolutions 78/241, 79/62 and 79/239, adopted during the 78th and 79th sessions, affirm that international law applies to lethal autonomous weapons and call for urgent international action.

U.S. military perspectives on autonomous weapons (TRADOC Mad Scientist blog, 28 Aug 2025)

A U.S. Army Mad Scientist Laboratory blog and podcast discussed the rising use of autonomous weapons:

- Operational proliferation. Conflicts in Ukraine and the Middle East show uncrewed systems enabling asymmetric strikes by weaker actors and eroding traditional air and naval dominance.

- Removal of humans from the loop. Nations are integrating AI and machine vision into lethal weapons, potentially removing human operators. U.S. policy (DoD Directive 3000.09) requires commanders to retain human judgment, but the blog acknowledged that the U.S. may develop LAWs if adversaries do.

- Adversary ambitions. Quoting experts, the article noted that the People’s Liberation Army sees AI as a leapfrog technology and Vladimir Putin declared that the leader in AI will rule the world.

- Moral and logistical challenges. The piece highlighted “black‑box” unpredictability, moral questions about machines deciding life and death, the need for updated acquisition processes and the asymmetry of ethics. It concluded that global consensus on AI’s role in war is essential.

4. Analysis and implications

Emerging trends

- AI lowers the barrier to cybercrime. Easy‑to‑use AI agents allow criminals with minimal technical skills to conduct complex operations. Ransomware‑as‑a‑service and AI‑built malware illustrate the democratization of cyber‑offensive capabilities.

- AI is an operational force multiplier. Military organizations view data and AI as weapons that can accelerate the OODA loop, turning sensor information into actions faster than adversaries. Open‑source infiltration and AI‑powered phishing show that adversaries weaponize trust and software supply chains.

- AI arms race and international governance. As militaries test lethal autonomous weapons, legal scholars and international bodies call for regulation. The GGE LAWS text and UN resolutions seek to ensure human responsibility and compliance with IHL.

Policy implications

- Strengthening AI security. AI developers should implement robust access controls, monitor usage patterns and build classifiers to detect malicious use. Governments can support public‑private partnerships for threat intelligence sharing.

- Securing software supply chains. Projects should vet contributors, employ automated security scanning and maintain transparency to reduce the risk of backdoors.

- Data governance and classification. Militaries and organizations must streamline data classification and provide sandboxed environments to accelerate AI deployment without compromising security.

- International coordination. Nations need to negotiate standards for lethal autonomous weapons, balancing innovation with legal and ethical constraints. Human control should remain central, and states must perform legal reviews for AI‑enabled systems.

Conclusion

August 2025 underscored that AI weaponization is no longer a future threat but a current reality. Cyber‑criminals and nation‑states alike are harnessing AI to scale attacks, erode trust and accelerate decision cycles. Meanwhile, policymakers grapple with how to govern lethal autonomous weapons without stifling innovation. Collaborative security, responsible development and international legal frameworks will be critical to mitigating the risks of AI weaponization while harnessing its potential benefits.