1. Introduction — A Quiet Architect of Algorithmic War

Palantir’s military AI isn’t firing missiles or piloting drones. But it may be deciding who gets seen, who gets flagged, and who ultimately gets targeted.

While the spotlight has focused on lethal hardware—autonomous drones, robotic tanks, AI dogfights—the most transformative shift in modern warfare is unfolding behind the screen. In conflict zones from the Middle East to Ukraine, Palantir’s software platforms are now fused into the very decision-making architecture of the U.S. military and its allies.

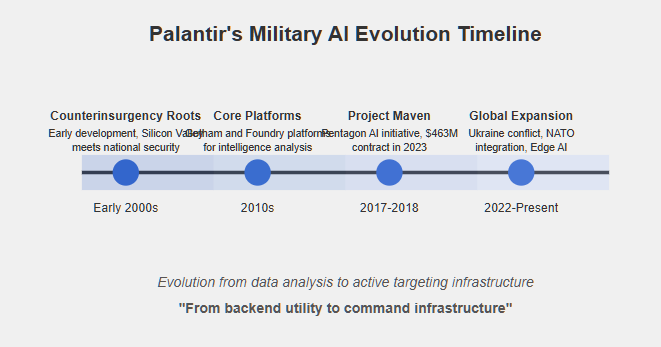

What began as a post-9/11 counterterrorism data tool has evolved into a global battlefield operating system, powering everything from ISR triage in Project Maven to real-time artillery coordination in Ukraine. Palantir doesn’t build weapons; it builds the dashboards that tell those weapons where to go.

Its influence is quiet, distributed, and deeply embedded. And yet, few understand the scope—or the implications—of Palantir’s role in shaping how wars are fought in the age of algorithmic warfare.

This article traces how Palantir’s military AI moved from counterinsurgency analytics to a keystone of kill-chain acceleration. Along the way, that shift has raised urgent questions about AI ethics, accountability, and the outsourcing of military judgment to software designed by private contractors.

2. Origins — Born in the Shadow of 9/11

Long before Palantir’s software was embedded in modern targeting operations, the company took shape in the aftermath of a national trauma.

Founded in 2003, Palantir Technologies emerged with direct support from the CIA’s venture arm, In-Q-Tel, as a countermeasure to the intelligence failures surrounding the 9/11 attacks. The company’s early mission was to help government agencies integrate fragmented data—disparate surveillance, financial, and communication streams—into something usable, actionable, and visual.

Its first major battlefield test came in Iraq and Afghanistan, where Palantir’s software helped analysts map insurgent networks, track IED patterns, and monitor personnel movement through biometric and HUMINT (human intelligence) data. U.S. Special Operations Forces, particularly those involved in counterinsurgency (COIN) missions, quickly became reliant on Palantir’s Gotham platform for intelligence visualization.

Unlike traditional defense contractors focused on hardware or weapons systems, Palantir inserted itself into the information infrastructure of war. Its engineers worked alongside operators in the field, rapidly iterating on tools to meet immediate tactical needs—turning software into a form of battlefield advantage.

That culture—Silicon Valley agility fused with national security imperatives—set the tone for Palantir’s future. Long before it helped automate targeting decisions, Palantir was already influencing who got captured, who got detained, and who was flagged as a threat.

The company’s counterinsurgency roots laid the groundwork for something much larger: an expanding, privatized role in how modern militaries understand and act on data in real time.

3. The Pivot — From COIN to Project Maven

For over a decade, Palantir’s reputation rested on its battlefield utility during the counterinsurgency era—data fusion, link analysis, threat prediction. But by the mid-2010s, the wars were changing—and so was the U.S. military’s relationship with AI.

In 2017, the Pentagon launched Project Maven, an ambitious initiative to integrate artificial intelligence into its intelligence, surveillance, and reconnaissance (ISR) systems. The goal was to automate the analysis of massive drone footage archives, identifying objects of interest far faster than human analysts could. Google was an early partner, but in 2018, after its involvement became public and employees protested, the company said it would not renew the contract.

That vacuum didn’t last long. Palantir reportedly stepped in to take over the work, leveraging its long-standing ties with the national security community and facing none of Google’s internal resistance.

By 2023, the Department of Defense had awarded Palantir a $463 million contract to fully support and scale Project Maven. Under Palantir’s stewardship, the project evolved beyond basic image recognition into a real-time battlefield intelligence platform, integrated across CENTCOM, SOCOM, and other combatant commands.

Maven’s AI systems now ingest drone video feeds, satellite imagery, and ISR data to flag vehicles, track personnel, and prioritize targets—functions that directly influence the speed and direction of lethal force.

This marked a decisive shift in the company’s trajectory. Palantir’s military AI was no longer just assisting analysts after the fact. It was embedded in the decision loop of live operations, shaping what warfighters see and how they act.

And while many of Maven’s technical details remain classified, its operational influence has grown quietly but steadily, proving that software doesn’t have to pull the trigger to change who gets targeted.

4. Tools of Influence — Gotham, Foundry & Edge AI

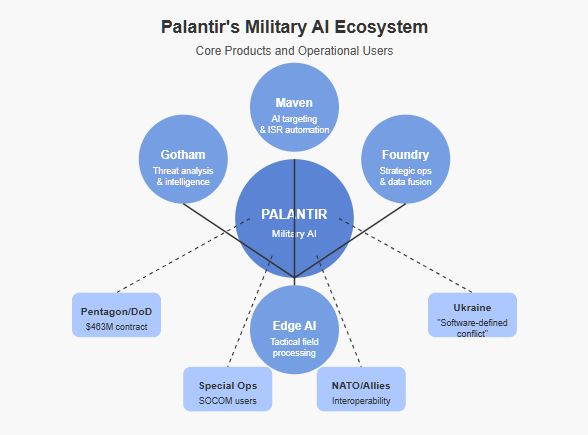

If Project Maven marked Palantir’s entry into real-time targeting pipelines, its software platforms—Gotham, Foundry, and emerging Edge AI kits—are the operational engines that drive its growing battlefield influence.

Gotham, Palantir’s original platform, was designed to help analysts map relationships between people, places, and events—essentially turning data into intelligence. In military contexts, it’s used for threat network analysis, detainee tracking, and geospatial intelligence. U.S. Special Operations Forces and intelligence agencies have relied on Gotham for more than a decade, using it to visualize and interrogate complex threat environments.

Foundry, by contrast, is a broader strategic tool. Originally adopted in the commercial and public health sectors, Foundry now plays a growing role in joint command operations, allowing combatant commands to fuse operational data, from logistics to ISR, into a unified decision space. According to reports, Foundry was instrumental in helping Ukrainian forces coordinate battlefield intelligence during the Russian invasion, including artillery strikes and drone reconnaissance.

More recently, Palantir has deployed Edge AI kits: portable systems capable of processing ISR data in real time at the tactical edge, without relying on cloud infrastructure. These kits allow forces in the field to process drone feeds and sensor inputs, run object detection locally, and feed the resulting intelligence directly into command platforms like Maven or Gotham.

Together, these platforms represent the core stack of Palantir’s military AI: a set of tools that doesn’t just support decision-making, but shapes it.

In a modern battlefield dominated by speed, ambiguity, and saturation, Palantir’s software is not just a utility—it’s becoming an operating system for war. And unlike traditional weapons platforms, its influence is often invisible, networked, and persistently embedded.

5. Ethics, Secrecy, and the Accountability Gap

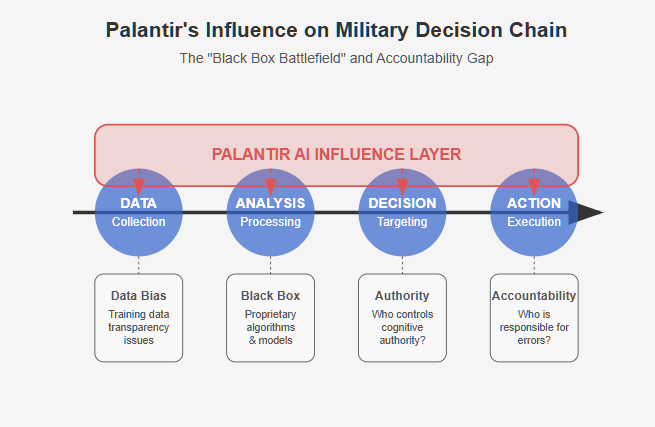

For all its operational value, Palantir’s military AI operates amid profound legal, ethical, and operational ambiguity. The company’s platforms now directly influence decisions about life and death, yet there is no clear framework for public oversight, transparency, or accountability.

One of the most urgent concerns is the lack of visibility into how Palantir’s AI systems are trained, validated, and deployed. The models used in Project Maven and other applications are proprietary. There is no public requirement to disclose error rates, bias in training data, or post-strike assessments linked to algorithmic outputs. As a result, AI’s role in targeting is growing even as it remains largely untraceable to outside observers.

Moreover, Palantir operates as a private contractor, meaning the chain of responsibility is further diluted. If a mistake occurs—if civilians are targeted, or intelligence is wrong—who is accountable? The analyst? The military commander? The software developer?

The worry isn’t just about rogue autonomy. It’s about systemic opacity—the quiet handoff of cognitive authority from soldiers to software, without clarity about where human judgment ends and algorithmic influence begins.

As Palantir’s military AI continues to expand its operational reach, the question isn’t whether it’s effective. The question is who gets to know how it works, and who answers when it doesn’t.

6. Expansion — From the Pentagon to Proxy Wars

Palantir’s influence no longer stops at U.S. borders. In recent years, Palantir’s military AI has become a key export, with its software platforms deployed in conflict zones far beyond traditional theaters.

Nowhere is this more evident than in Ukraine. Since the 2022 Russian invasion, Palantir has supported Ukrainian forces with real-time battlefield intelligence, reportedly helping coordinate artillery strikes, drone reconnaissance, and even predictive modeling for enemy troop movements. Palantir CEO Alex Karp has framed the war as a proving ground for the company’s technology, calling Ukraine “a software-defined conflict.”

Ukrainian operators have reportedly used the Foundry and Gotham platforms to fuse data from satellite imagery, drone feeds, and human intelligence, all under intense battlefield conditions. While full details remain classified, Ukraine represents a powerful test case for AI-assisted, networked warfighting at scale.

Meanwhile, Palantir is extending its footprint into NATO interoperability frameworks and is reportedly engaged in shaping the Joint All-Domain Command and Control (JADC2) infrastructure. These efforts aim to connect allied forces across air, land, sea, space, and cyber domains, creating a real-time battle network that will heavily rely on AI to ingest, prioritize, and route actionable intelligence.

In short, Palantir’s military AI is no longer a backend utility. It is fast becoming the command infrastructure of AI-enabled warfare. Its role in proxy conflicts and alliance coordination illustrates not just market dominance, but strategic alignment with the future of multinational military operations.

Palantir doesn’t just sell software. It exports algorithmic advantage—and that may be the most powerful capability of all.

7. Conclusion — Invisible Infrastructure, Visible Power

Palantir’s military AI doesn’t fire weapons, but it increasingly decides what warfighters see, how they interpret threats, and when they act.

From counterinsurgency operations to Project Maven and the war in Ukraine, Palantir has positioned itself not as a weapons manufacturer, but as the invisible architecture behind the kill chain. Its tools compress decisions, frame perceptions, and filter the chaos of war through code.

In the age of algorithmic warfare, power isn’t just measured in missiles—it’s measured in data, influence, and access to the decision loop.

And few actors have more access right now than Palantir.
