Introduction: AI and the Evolution of the Military Kill Chain

In modern warfare, the speed at which a target is detected, confirmed, and neutralized can determine the outcome of an entire conflict. This sequence, from identifying a threat to executing a strike, is known as the kill chain. Traditionally managed by human operators drawing on intelligence, surveillance, and tactical judgment, the kill chain is now undergoing a profound transformation. Artificial intelligence (AI) is being integrated into nearly every phase of this process, accelerating decision-making and enabling more complex, automated responses on the battlefield.

From real-time satellite surveillance and drone reconnaissance to algorithmic target prioritization and autonomous weapons deployment, AI systems are reshaping what lethality looks like in the 21st century. Projects like Maven, autonomous systems like Turkey’s Kargu-2, and conflicts such as Ukraine’s defense against Russian aggression provide a glimpse into a future where machines help prosecute—or potentially initiate—acts of war.

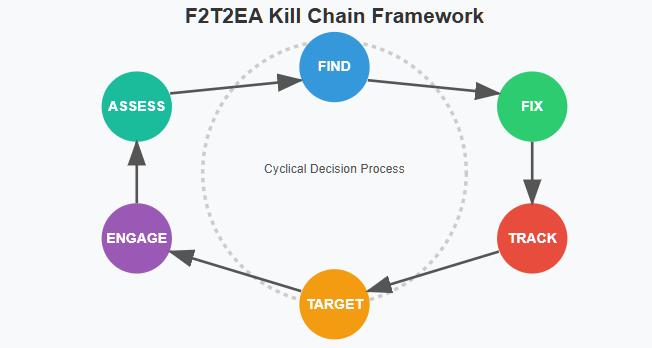

But what exactly does AI change at each step of the kill chain? And what are the implications for ethics, accountability, and global security? This explainer breaks down how AI integrates into the classic F2T2EA (Find, Fix, Track, Target, Engage, Assess) model—highlighting both its tactical advantages and its destabilizing risks.

What Is the Kill Chain? The F2T2EA Model Explained

The kill chain is a conceptual framework used by militaries to describe the sequential steps required to locate and neutralize a target. One of the most widely cited versions, used in U.S. dynamic-targeting doctrine, is the F2T2EA model, short for Find, Fix, Track, Target, Engage, and Assess. Each step represents a critical decision point in the cycle of lethal action, from the initial detection of a potential threat to the post-strike evaluation of success or failure.

- Find: Detect the presence of a potential target using ISR (Intelligence, Surveillance, Reconnaissance) systems.

- Fix: Pinpoint the target’s location and confirm its identity.

- Track: Monitor the target’s movements and maintain persistent awareness.

- Target: Select the appropriate strike option based on tactical and strategic objectives.

- Engage: Deliver kinetic (or non-kinetic) effects to neutralize the target.

- Assess: Analyze post-strike data to determine mission effectiveness.

Originally designed for human-led operations, the kill chain is now being reengineered with AI-driven capabilities that can dramatically compress timelines and increase precision, while also reducing human oversight.

Project Maven and the Foundations of AI-Driven Targeting

Launched in 2017 by the U.S. Department of Defense as the Algorithmic Warfare Cross-Functional Team, Project Maven marked a pivotal moment in the military adoption of artificial intelligence. Its initial mission was to use machine learning to help analysts sift through the massive volumes of full-motion video captured by drones and other ISR platforms, automatically detecting vehicles, objects, and potential threats.

Maven’s goal was to accelerate intelligence workflows, reduce analyst fatigue, and shorten the time between sensor input and actionable insight. By integrating computer vision algorithms into battlefield surveillance, it became one of the first major efforts to plug AI into the early phases of the kill chain—particularly the “Find” and “Fix” stages.

The project became a lightning rod for ethical controversy when Google, an early contractor, announced in 2018 that it would not renew its Maven contract following employee protests. Still, Maven laid the groundwork for broader AI integration in military targeting systems, influencing platforms across NATO and partner nations and raising urgent questions about accountability, transparency, and escalation risk in automated warfare.

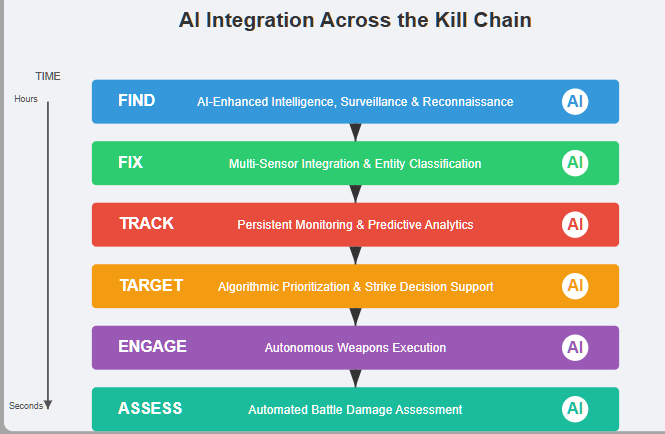

AI in the Kill Chain: Step-by-Step Integration

Artificial intelligence is no longer just a support tool; it is becoming an operational actor across the military kill chain. From identifying targets to guiding munitions and assessing battle damage, AI systems are being introduced at every stage of the F2T2EA process. Below is a breakdown of how AI enhances, accelerates, and sometimes replaces human roles at each step:

1. Find – AI-Enhanced Intelligence, Surveillance & Reconnaissance (ISR)

AI significantly expands the speed and scale of ISR operations. Algorithms can process satellite imagery, drone feeds, and radio frequency (RF) signatures in real time, identifying patterns that human analysts might miss.

- Project Maven and systems like Palantir’s MetaConstellation use computer vision and large-scale sensor fusion to automate target detection across multi-domain inputs.

- AI-driven natural language processing (NLP) is also used to analyze open-source intelligence (OSINT) and intercepted communications for early threat signals.

AI Impact: Accelerates situational awareness and vastly increases coverage of operational environments.

2. Fix – Confirming Identity and Location

Once a potential target is identified, AI systems help confirm its location and classify it with high confidence.

- Machine learning models fuse data from multiple sensors (radar, thermal, and electro-optical/infrared, or EO/IR) to reduce uncertainty.

- AI-powered facial recognition and object classification are used to match detected entities to watchlists or threat databases.

AI Impact: Reduces ambiguity and enables higher-confidence targeting decisions in complex environments.

3. Track – Persistent Target Monitoring

AI enables real-time tracking of mobile targets using predictive analytics and behavior modeling.

- Autonomous drones and AI-coordinated sensor swarms can maintain continuous surveillance, even under GPS denial or contested conditions.

- Advanced pattern recognition allows AI to forecast a target’s likely movement path, informing preemptive maneuvers.

AI Impact: Maintains “eyes on” without human fatigue and reduces target loss in fluid combat zones.

4. Target – Algorithmic Prioritization and Strike Decision Support

AI plays a growing role in selecting targets and recommending strike options.

- Decision-support systems rank targets by threat level, strategic value, or rules of engagement (ROE) criteria.

- Some platforms allow AI to suggest or queue strike packages based on evolving battlefield conditions.

AI Impact: Enhances tactical decision-making but raises serious questions about algorithmic bias, transparency, and ethical use.

5. Engage – Lethal Execution with Autonomous Weapons

At this stage, AI moves from advisory roles to direct control in some systems.

- Loitering munitions such as Turkey’s Kargu-2 and Israel’s Harpy (designed to seek out and strike radar emitters on its own) are capable of engaging targets autonomously.

- AI-enabled munitions make split-second decisions on when and how to strike, often with minimal human oversight.

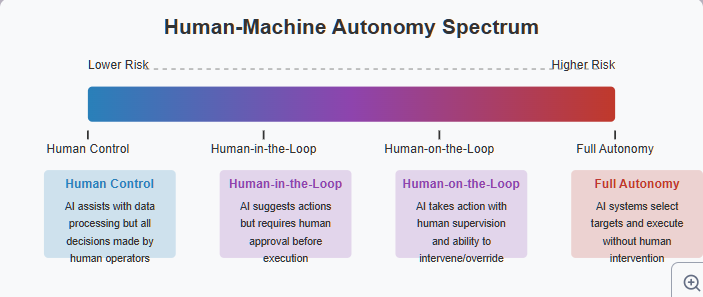

- Systems are categorized by autonomy levels:

- Human-in-the-loop (approval required),

- Human-on-the-loop (monitoring),

- Human-out-of-the-loop (full autonomy).

AI Impact: Raises the most urgent concerns about accountability, misidentification, and loss of human control in lethal operations.

6. Assess – Automated Battle Damage Assessment (BDA)

AI systems rapidly assess the effects of a strike using post-mission imagery and sensor feedback.

- Computer vision compares before-and-after imagery to estimate target destruction or mission success.

- AI-driven ISR loops can reassign assets for follow-up action autonomously.

AI Impact: Speeds up re-engagement cycles and supports dynamic mission planning.

Case Studies: How AI-Enabled Kill Chains Are Reshaping Conflicts

AI-driven kill chains are no longer theoretical; they are already shaping today’s battlefields and military planning. Below are four examples, two drawn from recent conflicts and two from military doctrine and research programs, that reveal how AI systems are influencing military outcomes, shaping doctrine, and pushing ethical boundaries:

1. Libya (2020): Kargu-2 and the First Alleged Autonomous Strike

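According to a 2021 UN Panel of Experts report on Libya, a Turkish-made Kargu-2 loitering munition may have conducted an autonomous lethal engagement during the Libyan civil war. The drone, deployed by forces of the Government of National Accord (GNA), reportedly hunted down retreating forces affiliated with Khalifa Haftar’s Libyan National Army (LNA) without requiring a data link between operator and munition.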

If confirmed, this incident would mark the first known instance of an autonomous weapon system selecting and engaging a human target without human oversight—a potential watershed moment in warfare history.

Implications: Raises urgent questions about accountability, rules of engagement, and the threshold for autonomous lethality in asymmetric warfare.

2. Ukraine: AI-Enhanced ISR and Decentralized Targeting

Ukrainian forces have used AI-assisted drones and satellite feeds to rapidly locate and strike Russian positions. Commercial technology such as SpaceX’s Starlink satellite internet enables near real-time coordination across units, effectively compressing the kill chain from hours to minutes.

Implications: Demonstrates how low-cost AI and commercial off-the-shelf (COTS) technology can rival advanced state military systems in dynamic conflict zones.

3. China: “System Destruction Warfare” Doctrine

China’s People’s Liberation Army (PLA) emphasizes preemptive disruption of enemy kill chains through AI-enabled cyberattacks, jamming, and deception. Its strategy treats the enemy’s C4ISR (command, control, communications, computers, intelligence, surveillance, and reconnaissance) network as a primary target, seeking to blind and fragment decision-making before kinetic conflict begins.

Implications: AI is not only a component of one’s own kill chain but also a tool for neutralizing the adversary’s.

4. DARPA Mosaic Warfare: Modular AI Kill Chains

The U.S. Defense Advanced Research Projects Agency (DARPA) is developing Mosaic Warfare, where AI dynamically assembles and reconfigures kill chains using distributed assets. Instead of a linear process, kill chain roles (sensor, shooter, commander) can be reassigned in real-time across platforms.

Implications: Future kill chains may be nonlinear, swarm-based, and dynamically recomposed, with AI serving as the orchestrator.

Risks and Red Lines: AI, Acceleration, and Escalation

As AI systems accelerate the military kill chain, they also introduce new strategic and ethical risks—some of which could destabilize global security frameworks.

One of the most pressing dangers is the emergence of “flash war” scenarios, where AI-enabled systems detect threats and authorize strikes faster than humans can intervene or de-escalate. In high-stakes environments—such as disputed airspace or contested cyber domains—milliseconds matter, and automated miscalculations could trigger unintended conflicts.

Moreover, AI introduces opacity into lethal decision-making. Black-box algorithms that prioritize targets or assess threat levels often lack explainability, making accountability nearly impossible when errors occur. This is especially dangerous in urban warfare or hybrid conflicts, where civilian-military distinctions are blurred.

Compounding this is the regulatory vacuum: there is currently no binding international treaty governing the use of lethal autonomous weapon systems (LAWS). Without common standards, states may prioritize speed and capability over caution, crossing ethical and legal red lines in the process.

The Future: Modular Kill Chains, Human-AI Teaming, and Arms Control Challenges

The kill chain of the future will be modular, adaptive, and increasingly AI-orchestrated. Instead of rigid, linear processes, emerging concepts like DARPA’s Mosaic Warfare envision a battlefield where sensors, shooters, and decision nodes are interchangeable and dynamically reassigned in real time by AI systems.

This evolution will likely center on human-AI teaming, where machines handle speed and complexity while humans maintain strategic intent and legal accountability. However, the line between machine recommendation and human decision is already blurring.

At the same time, the international community is scrambling to keep pace. Efforts to regulate lethal autonomous weapon systems, including talks under the UN Convention on Certain Conventional Weapons (CCW), have stalled, with major powers divided on definitions and limits.

The strategic race is clear: nations that can optimize AI across the kill chain gain decisive tactical advantages. The real question is whether global governance can evolve quickly enough to prevent catastrophic misuse.

Conclusion: The Algorithmic Edge—But at What Cost?

AI is rapidly reshaping the logic of lethality. By accelerating and automating the kill chain, militaries can act faster, strike smarter, and operate with unprecedented efficiency. But that same speed introduces systemic risk—compressing decision time, obscuring accountability, and enabling autonomous action without clear human oversight.

The kill chain is no longer just a tactical sequence—it’s becoming a software-defined battlespace, where algorithms decide who lives and who dies.

As AI continues to plug into every phase of warfare, the central challenge remains: Can we retain human judgment in systems designed for machine speed? Or will algorithmic advantage outrun ethical restraint?
