Introduction: The Law Can’t See the Algorithm

The rise of autonomous weapons is forcing a reckoning with international law. As battlefield AI evolves from intelligence support to lethal force, the legal systems designed to govern warfare are lagging dangerously behind.

At the center of this growing gap are legal questions about lethal autonomous weapons that the Geneva Conventions and traditional International Humanitarian Law (IHL) weren’t built to answer. These frameworks assume that a human makes the final decision to pull the trigger. But what if a machine does?

From AI-powered drones that choose targets to automated battle damage assessments used to justify follow-up strikes, the rules of war are being rewritten in code. And without clear accountability, no one—not the commander, not the coder—may be legally responsible for an autonomous weapon’s actions.

This briefing explores how lethal AI is outpacing the laws meant to restrain it, and what must happen before the law of armed conflict fails entirely.

What the Geneva Conventions Actually Cover—and Don’t

The Geneva Conventions and their Additional Protocols are the backbone of International Humanitarian Law (IHL). They were designed to regulate conduct in armed conflict, protect civilians, and ensure accountability for war crimes. But they were written for human combatants, not autonomous systems executing decisions at algorithmic speed.

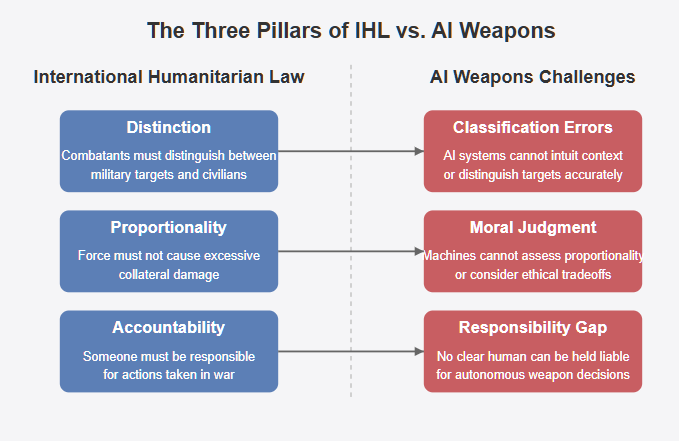

For autonomous weapons, three requirements of IHL matter most:

- Distinction — Combatants must distinguish between military targets and civilians.

- Proportionality — Force must not cause excessive collateral damage relative to the anticipated military advantage.

- Accountability — Someone must be responsible for actions taken in war.

The problem? Lethal autonomous weapons challenge all three.

AI systems don’t possess intent, can’t intuit context, and operate without moral judgment. When a machine classifies a vehicle as a threat and engages it, can it assess proportionality? If the classification is wrong, who’s liable?

Critically, there is no formal legal definition of lethal autonomous weapons under IHL. That absence leaves states free to interpret the law based on national policy—and opens the door to asymmetric use of autonomy, especially in conflicts where speed and plausible deniability are assets.

In short: the law assumes a human makes the decision. The tech no longer guarantees it.

The Accountability Black Hole: Who’s Responsible When AI Kills?

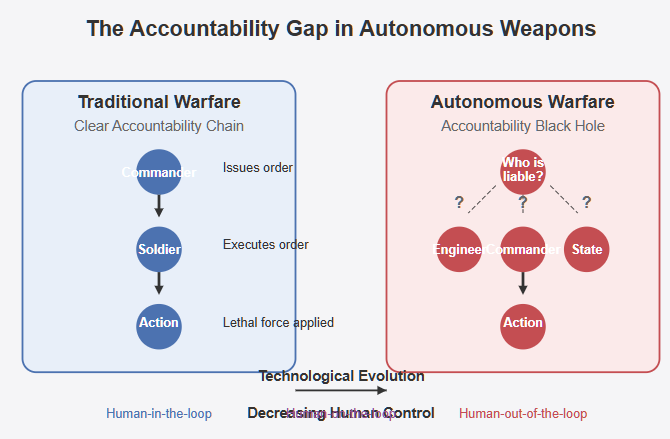

In conventional warfare, the chain of accountability is comparatively clear: a commander orders a strike, a soldier pulls the trigger, and both can be held liable for unlawful actions. With lethal autonomous weapons, that chain becomes murky and can disappear entirely.

As autonomy increases, we move from human-in-the-loop (direct control) to human-on-the-loop (oversight) to human-out-of-the-loop (no intervention). In the last case, an AI system could select and engage a target without any real-time human involvement.

If such a system makes a lethal error—say, misclassifying a civilian vehicle as a military target—who’s to blame?

- The military commander who deployed it?

- The software engineer who designed the targeting algorithm?

- The defense contractor who supplied the system?

- Or the state that approved its use?

This dilemma is often referred to as the “responsibility gap,” a term used by philosophers and legal scholars to describe situations where no human actor can clearly be held liable under current legal frameworks.

International courts require intent, knowledge, and foreseeability to assign legal blame. But when AI systems are opaque—even to their creators—those standards break down.

Without structural accountability, lethal autonomous weapons risk becoming tools of untraceable violence—enabling plausible deniability at machine speed.

Case Studies: Legal Gray Zones in Action

The legal ambiguity surrounding lethal autonomous weapons isn’t theoretical—it’s already playing out on the battlefield. Below are three high-profile cases that illustrate how international law struggles to catch up with AI in combat.

▪ Libya (2020): Kargu-2 Loitering Munition

According to a 2021 report by the UN Panel of Experts on Libya, Turkish-made Kargu-2 loitering munitions may have engaged targets autonomously during the Libyan civil war. The report described the systems as programmed to attack without requiring a data link between operator and munition. If a drone made a lethal decision independently, was that a lawful use of force, or a war crime with no clear perpetrator?

▪ Ukraine: Autonomous Targeting and Battle Damage Assessment

Ukrainian forces have used AI to assist in target acquisition and post-strike damage analysis. If a machine misidentifies a civilian object as a valid target, does it violate Article 51 of Additional Protocol I, which prohibits indiscriminate attacks? Where’s the human accountability?

▪ Israel’s AI “Kill List” System

Reports suggest that Israel’s AI-supported targeting systems accelerate kill chain processing, raising concerns over whether human review is still meaningful. If the system prioritizes speed over legal review, do strikes still meet proportionality and necessity standards?

Each of these cases shows the legal gray zone widening—and the accountability structure eroding—as AI plays a larger role in lethal operations.

The Collapse of Consensus: Why the CCW Is Stuck

The international community has spent over a decade debating how to regulate lethal autonomous weapons, primarily under the auspices of the UN Convention on Certain Conventional Weapons (CCW). Yet despite mounting concern, no binding agreement on these systems has emerged.

The core problem is political, not technical. States remain deeply divided:

- Countries like Austria, Chile, Brazil, and New Zealand advocate for a preemptive ban on fully autonomous weapons.

- Others—including the United States, Russia, China, and Israel—support the idea of “meaningful human control” but oppose any treaty that limits military innovation or strategic flexibility.

Efforts to define what even constitutes a lethal autonomous weapon have stalled. The term “autonomous” itself is contested, and enforcement mechanisms remain vague or nonexistent.

The result is a deadlocked process: no universal standards, no agreed thresholds for autonomy, and no legal framework to govern systems that are already being tested and deployed.

As states accelerate their AI military programs, the regulatory gap is growing wider—leaving the future of warfare to be shaped by unilateral norms, not global consensus.

What a Real AI Weapons Treaty Would Require

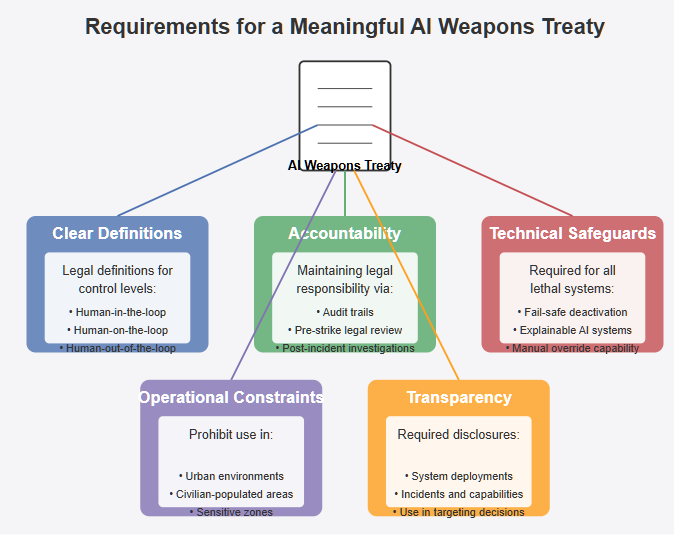

If the international community wants to prevent a future where autonomous systems make unaccountable decisions to kill, it will need more than vague principles: it will need binding rules. A meaningful treaty on lethal autonomous weapons must address the following:

Clear Definitions

Establish legally binding thresholds for human-in-the-loop, on-the-loop, and out-of-the-loop systems. Define what constitutes “lethal autonomy” under international law.
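A treaty definition is only useful if the three control modes can be told apart in practice. The following is a minimal sketch, not a legal standard, of one way to state the difference: what happens when the human says nothing. The names `ControlMode` and `action_proceeds` are hypothetical and chosen only for illustration.

```python
from enum import Enum
from typing import Optional


class ControlMode(Enum):
    HUMAN_IN_THE_LOOP = "in"       # a person must approve each action
    HUMAN_ON_THE_LOOP = "on"       # a person supervises and may veto
    HUMAN_OUT_OF_THE_LOOP = "out"  # no real-time human involvement


def action_proceeds(mode: ControlMode, human_decision: Optional[bool]) -> bool:
    """Return True if a proposed action would go ahead.

    human_decision: True = approved, False = vetoed, None = no human input.
    """
    if mode is ControlMode.HUMAN_IN_THE_LOOP:
        # Silence means "no": nothing happens without explicit approval.
        return human_decision is True
    if mode is ControlMode.HUMAN_ON_THE_LOOP:
        # Silence means "yes": the action goes ahead unless vetoed.
        return human_decision is not False
    # Out of the loop: the human input is never consulted.
    return True
```

Under this framing, the legally significant line falls between the first two modes: once silence counts as consent, the quality of human review depends entirely on how much time and information the supervisor actually has.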

Accountability Mechanisms

Mandate that states retain legal responsibility for any deployment of autonomous weapons. Implement audit trails, pre-strike legal review, and post-incident investigations with machine-action logs.
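An audit trail only supports investigations if it cannot be quietly edited after the fact. As a rough illustration of what a tamper-evident machine-action log could look like, the sketch below chains each entry to the previous one with a SHA-256 hash. The record fields and the function names `append_entry` and `verify` are assumptions made for this example, not part of any existing system or standard.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: Dict) -> str:
    # Serialize with sorted keys so the same event always hashes the same way.
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict], event: Dict) -> None:
    """Append an event, binding it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "event": event,
        "prev_hash": prev_hash,
        "hash": _digest(prev_hash, event),
    })


def verify(log: List[Dict]) -> bool:
    """Recompute the chain; any edited or removed entry breaks it."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A reviewer holding only the final hash can detect later changes to any earlier record, which is the property a post-incident investigation would need. A chain like this does not prove the logged events are true; it only shows that they have not been altered since they were written.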

Technical Safeguards

Require that all lethal systems include:

- Fail-safe deactivation switches

- Explainability protocols (ability to interpret AI decisions post hoc)

- Manual override mechanisms

Operational Constraints

Prohibit use of fully autonomous weapons in urban or civilian-populated environments. Enforce time delays or human confirmation requirements in strategically sensitive zones.

Transparency and Reporting

Oblige states to disclose deployments, incidents, and system capabilities—especially when AI is used in targeting or lethal decision-making.

Without these measures, autonomous warfare will continue to evolve without legal guardrails, increasing the risk of unaccountable violence at machine speed.

Conclusion: No Law, No Limits

The Geneva Conventions were built to restrain human behavior in war—not to regulate software deciding who lives and who dies. But as AI increasingly assumes roles once held by soldiers and commanders, we are entering a world where lethal force can be applied without intent, context, or accountability.

Without updated legal frameworks, lethal autonomous weapons will continue to erode the foundational principles of international humanitarian law. The result isn’t just a legal gray zone; it’s a vacuum of responsibility, where no one can be held accountable for what the machine has done.

Unless the global community acts, we risk outsourcing the ethics of war to black-box algorithms—and abandoning the rules of war entirely.

Lethal Autonomous Weapons and Legal Gray Zones: Why the Geneva Conventions Are Falling Behind