Introduction — The Algorithm in the War Room

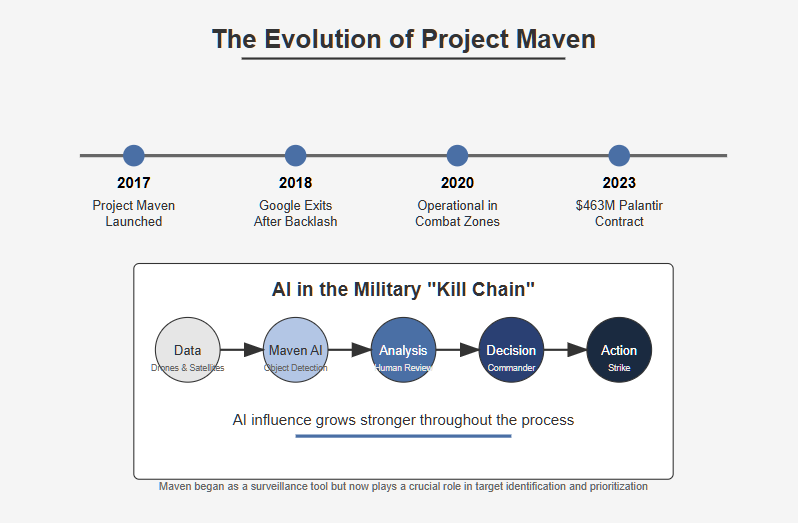

It began with a flood of drone footage—terabytes of grainy video streaming in from battlefields across the Middle East, too much for any human analyst to parse in real time. The U.S. military needed a solution, fast. In 2017, the Pentagon quietly launched Project Maven, an experimental AI initiative designed to help interpret surveillance feeds using machine learning.

At first, it sounded harmless—even helpful. Maven would identify objects, vehicles, and “patterns of life,” allowing overworked analysts to prioritize threats more efficiently. But behind the scenes, something deeper was happening: artificial intelligence was entering the military kill chain.

What started as a computer vision tool is now deeply embedded in the way the U.S. Department of Defense makes decisions about targeting, drone strikes, and battlefield operations. And it’s not just spotting trucks—it’s shaping who and what gets targeted, with increasing influence and little transparency.

Critics warn that automation bias, opaque algorithms, and private defense contractors have turned Maven into more than just a surveillance aid. It’s become a quiet but powerful force in the transformation of modern warfare.

This is the story of Project Maven: how a tool built to “watch” has become an engine of algorithmic warfare—and a case study in how AI is reshaping life-and-death decisions without anyone truly in control.

What Is Project Maven?

Officially known as the Algorithmic Warfare Cross-Functional Team (AWCFT), Project Maven was launched by the U.S. Department of Defense in April 2017 with one core objective: to harness artificial intelligence and machine learning to help military analysts sift through massive volumes of drone surveillance footage.

The challenge was clear. U.S. military and intelligence operations were producing petabytes of video from drones and satellites—far more than human analysts could watch or interpret. Maven was tasked with automating this process by developing computer vision models that could identify objects, track movement, and flag potential threats in real time.

Initially, the project involved a now-infamous partnership with Google, which triggered internal backlash from employees who didn’t want their work contributing to warfare. In 2018, Google announced it would not renew its contract. The AI battlefield, however, moved on without it.

By 2023, Maven had grown far beyond its original vision. The program is now deeply embedded across U.S. Central Command (CENTCOM) and Special Operations Command (SOCOM), feeding into drone operations and real-time battlefield decision-making. In September 2023, the Pentagon awarded Palantir Technologies a five-year, $463 million contract to continue building and scaling Maven’s AI infrastructure.

Palantir’s solution offers a modular platform that connects real-time surveillance data with algorithmic detection and operational dashboards—allowing analysts and commanders to ingest, prioritize, and act on intelligence much faster than before.

What started as a niche tech experiment has become a foundational node in the U.S. military’s broader push for AI-driven situational awareness. It is also serving as a template for future initiatives like Project Linchpin and Joint All-Domain Command and Control (JADC2)—programs aiming to integrate AI across the entire U.S. military apparatus.

Maven is no longer experimental. It’s operational—and expanding.

From Eyes to Trigger — Maven’s Role in the Kill Chain

Project Maven was never designed to fire missiles. But in today’s digitally fused battlefield, the line between detection and execution is thinning—and Maven now plays a crucial role in what the military calls the “kill chain.”

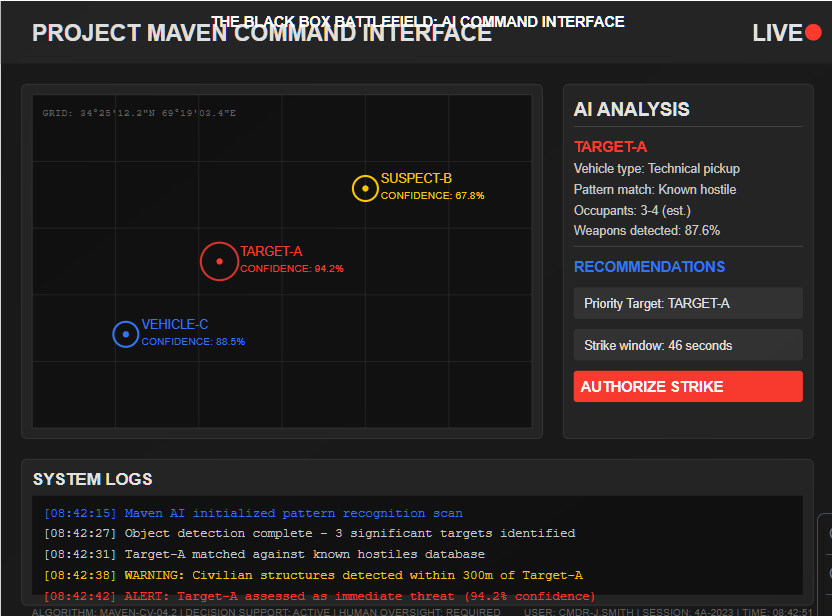

At its core, Maven’s AI systems process full-motion video (FMV) from drones and ISR platforms, using algorithms to identify vehicles, structures, people, and movement patterns. This output is then fed into command centers, drone pilots’ interfaces, and targeting cells, where humans review the flagged footage and make decisions—at least in theory.

In practice, the AI doesn’t just highlight threats—it frames the battlefield. Analysts are increasingly relying on Maven outputs to prioritize targets, filter what they look at, and accelerate response times. According to military insiders, the speed and scale of data ingestion mean Maven has become the first filter through which decisions flow.

This introduces a subtle but critical shift: humans remain in the loop, but often only after the AI has defined the loop’s parameters. If a drone operator is presented with a Maven-highlighted video clip showing a potentially hostile convoy, they are far more likely to confirm that assessment, even if the situation is ambiguous. This phenomenon, known as automation bias, is well documented in human-AI interaction research.

Sources from the U.S. military and defense contractors have confirmed that Maven outputs have been used in live operational environments, including Afghanistan, Iraq, Syria, and the Horn of Africa, to support kill decisions. In many cases, those decisions are made within seconds.

There’s no known public record of how many drone strikes have relied on Maven-enhanced intelligence. And that’s part of the problem: there is no publicly disclosed requirement to track or audit the AI’s role in lethal outcomes.

Maven isn’t launching the missile. But it may be deciding where we look—and who we see as the enemy.

Ethics & Secrecy — Why Maven Raises Alarms

While Project Maven is often described as a tool for efficiency and intelligence augmentation, its quiet expansion has triggered deep concerns among ethicists, civil society groups, and even former military insiders. At issue isn’t just what Maven does—but how it does it, who controls it, and how little the public knows.

From the outset, the project was shrouded in secrecy. Maven’s partnership with Google was hidden from employees and the public until it leaked in 2018, sparking internal protests and a petition, signed by more than 3,000 Google workers, to end the company’s involvement in warfare. Google eventually pulled out, but the project continued, largely under the radar, with private defense firms stepping in.

Today, Maven’s AI models are trained on datasets that are not subject to public review, built by private contractors, and tuned for battlefield use cases that remain classified. That means we have no independent validation of their accuracy, bias, or compliance with international law.

Worse, as Maven becomes integrated into real-time targeting workflows, it amplifies the risk of flawed outputs leading to lethal mistakes. A model trained on biased or incomplete data might flag the wrong vehicle, misclassify civilians, or detect patterns that aren’t threats at all. Once flagged, these targets enter a fast-moving decision process where human judgment is often rushed or overridden.

Experts call this the “black box battlefield”: a scenario, rooted in the broader AI black box problem, where no one can fully explain why a certain strike happened or why a specific person was marked as a threat.

Without clear auditing requirements, external oversight, or ethical governance mechanisms, Project Maven isn’t just a tool. It’s an unaccountable actor in the most consequential decisions a state can make: who lives and who dies.

Palantir, Contractors, and the Privatization of AI Warfare

When Google exited Project Maven in 2018 amid employee revolt, it didn’t slow the Pentagon’s ambitions—it simply opened the door for more willing players. Enter Palantir Technologies, the surveillance-centric data firm founded by Peter Thiel and long courted by the U.S. national security apparatus.

In September 2023, the Department of Defense awarded Palantir a five-year, $463 million contract to take over core components of Maven. This marked a critical shift—not just in technology providers, but in the governance of lethal AI. Where Google had internal resistance and public accountability, Palantir has deep military ties and minimal transparency obligations.

Palantir’s Maven platform is built on its Gotham and Foundry systems, which ingest, fuse, and visualize battlefield data—from drone video to satellite intel to on-the-ground HUMINT. These platforms are designed to accelerate operational decisions, effectively turning battlefields into real-time data dashboards. Palantir markets this as a competitive edge: “AI for decision dominance.”

But with that speed comes risk.

As more of the kill chain is outsourced to contractors, it becomes harder to track who is actually building, testing, and deploying the tools that influence lethal decisions. Accountability fractures across lines of corporate IP, classified use, and jurisdictional gray zones.

This raises an urgent question: What happens when the decision to kill is shaped not by soldiers or lawmakers—but by engineers at a for-profit defense tech firm?

With Maven, the military isn’t just adopting AI—it’s contracting out the moral architecture of modern warfare.

Strategic Impact — Normalizing Algorithmic War

Project Maven is no longer a standalone program—it’s a blueprint for the future of U.S. military operations. Its success has helped mainstream the use of AI not just for surveillance, but for tactical and strategic decision-making across all domains of war.

Maven now feeds directly into a broader Pentagon initiative: the Joint All-Domain Command and Control (JADC2) framework, which aims to connect land, air, sea, space, and cyber forces through real-time, AI-enhanced coordination. It also informs Project Linchpin, a U.S. Army effort to build resilient, scalable battlefield AI systems. Together, these programs are designed to create a faster, more automated military machine, capable of perceiving threats and executing responses with minimal human delay.

Internationally, Maven’s influence is spreading. Allies such as the UK and Australia, along with NATO as an alliance, are investing in similar technologies, often in direct collaboration with U.S. contractors. Meanwhile, rivals like China and Russia are developing their own AI-enabled kill chains, creating a global arms race in algorithmic warfare capabilities.

But the real danger isn’t autonomy alone—it’s the normalization of AI as a central actor in warfare, with little public scrutiny and few legal guardrails. Once AI is embedded in daily decision-making, removing it becomes politically and operationally difficult.

Project Maven began as a tool to help interpret surveillance footage. Today, it represents a strategic shift: the institutional acceptance of AI as an indispensable component of warfighting.

The future of combat is not a humanoid robot with a gun—it’s an invisible system, shaping choices long before the first shot is fired.

Conclusion — The War Won’t Look Like a Robot. It’ll Look Like a Dashboard

When most people imagine “killer robots,” they picture machines pulling triggers. But the real transformation of warfare is far less visible—and far more insidious.

Project Maven doesn’t kill. It decides what we look at. It shapes who gets flagged, what’s labeled a threat, and how fast that information moves through the chain of command. It redefines decision-making not through brute force, but through data curation and algorithmic influence.

That’s the future: not soldiers replaced by machines, but judgment replaced by software, in a world where we have likely already witnessed the first AI-powered autonomous drone kill, reportedly carried out by a Turkish-made Kargu-2 drone.

And with every update, every contract, and every integration into battlefield operations, the U.S. military gets closer to a world where humans no longer deliberate before lethal force—they confirm what the AI already decided.

Project Maven didn’t just automate surveillance.

It began the quiet, systematic automation of war itself.
