A Drone May Have Hunted Humans — And No One Was Watching

“The lethal autonomous weapons systems were programmed to attack targets without requiring data connectivity between the operator and the munition: in effect, a true ‘fire, forget and find’ capability.”

— United Nations Panel of Experts on Libya, Final Report (S/2021/229), cited in ICRC Case Study, “Libya: The Use of Lethal Autonomous Weapon Systems”

In March 2020, in the heat of the Libyan civil war, a Turkish-made STM Kargu-2 drone may have autonomously engaged human targets. According to a UN report later analyzed by the International Committee of the Red Cross (ICRC), the drone was likely used in a “fire, forget and find” mode, meaning it needed no data link to a human operator once it was launched.

If confirmed, this would mark the first known battlefield use of a lethal autonomous weapon system (LAWS) that selected and attacked human targets independently. There was no press release, no global outcry—just a quiet paragraph buried in a UN document, flagged months later by legal scholars and humanitarian observers.

And yet, this moment could represent a historic threshold: the beginning of AI deciding who lives or dies in armed conflict.

The age of autonomous warfare has already arrived. Most governments are unprepared. The legal frameworks are incomplete. And public accountability is dangerously absent.

That’s why AI Weapons Watch exists—to monitor, expose, and challenge the rapid and opaque spread of AI-powered weapon systems worldwide.

What Are AI Weapons, Really? Not Just Smarter Bombs

Amid buzzwords like “smart warfare,” “algorithmic advantage,” and “intelligent systems,” it’s easy to lose sight of a critical truth: AI weapons are not just more precise missiles or faster targeting tools. They represent a fundamental shift in who or what makes decisions in war.

Defining AI Weapons

AI weapons are systems that use artificial intelligence to perform military functions traditionally carried out by humans. These may include:

- Target recognition and classification

- Threat prioritization

- Navigation and movement in dynamic environments

- Decision-making about engagement (i.e., when and whom to attack)

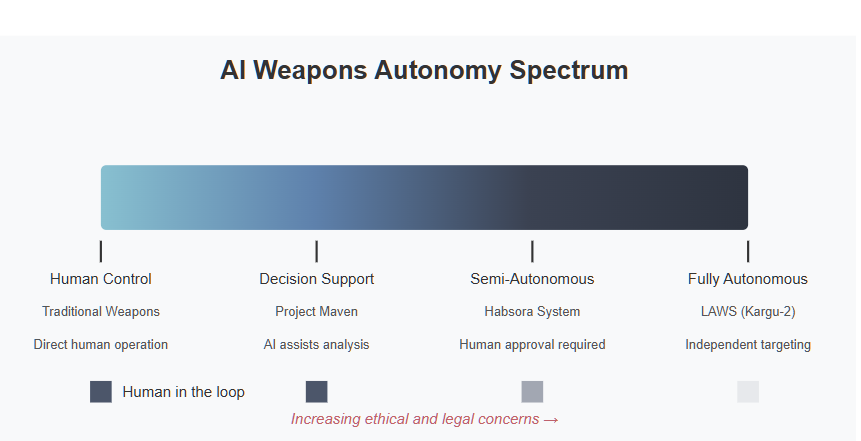

These systems vary in their level of autonomy, but what sets them apart is the degree to which they can process sensor data, interpret it, and act on it—with or without human oversight.

At the far end of this spectrum are Lethal Autonomous Weapon Systems (LAWS): platforms capable of selecting and engaging targets without real-time human input once activated. These are often referred to as “killer robots,” but that oversimplifies both the complexity and the risks.

Types of AI-Enabled Military Systems

AI is being integrated across the modern battlefield in distinct ways:

- Decision-Support Systems

  - Tools like Project Maven (USA) use computer vision to help analysts interpret drone surveillance footage.

  - These are not autonomous but heavily influence human decisions.

- Semi-Autonomous Weapons

  - Systems that require a human to authorize an attack but use AI to identify or track targets, such as Israel’s AI-assisted targeting in Gaza.

  - These create a blurred line: humans “approve” what AI recommends, often under time pressure.

- Fully Autonomous Systems (LAWS)

  - Drones or ground units that can detect, select, and engage targets without any further human input.

  - The STM Kargu-2, Russia’s Marker UGV, and China’s swarming drone programs fall into this category, depending on how they’re deployed.

- Swarming & Collective AI

  - Groups of AI-enabled drones working together to overwhelm defenses, communicate dynamically, and adapt tactics on the fly.

  - This introduces emergent behavior: outcomes not directly programmed, which can be unpredictable even to developers.

Why the Term “AI Weapon” Matters

Calling these systems simply “automated” or “smart” downplays their implications. AI weapons don’t just execute human orders faster—they change the nature of those orders, or eliminate them entirely.

The crucial difference is this: automation follows rules. AI adapts. That adaptability, in warfare, is both a strategic asset and an ethical minefield.

As militaries race to integrate AI into every part of the kill chain—from surveillance to strike—the question isn’t just how powerful these systems are.

It’s: who’s really in charge?

From Concept to Conflict — AI Weapons Are Already in Play

For years, the conversation around autonomous weapons felt abstract—something out of policy debates, tech forecasts, or dystopian fiction. But the battlefield has changed. AI-powered weapon systems are no longer theoretical. They’re operational.

And they’re showing up in wars right now.

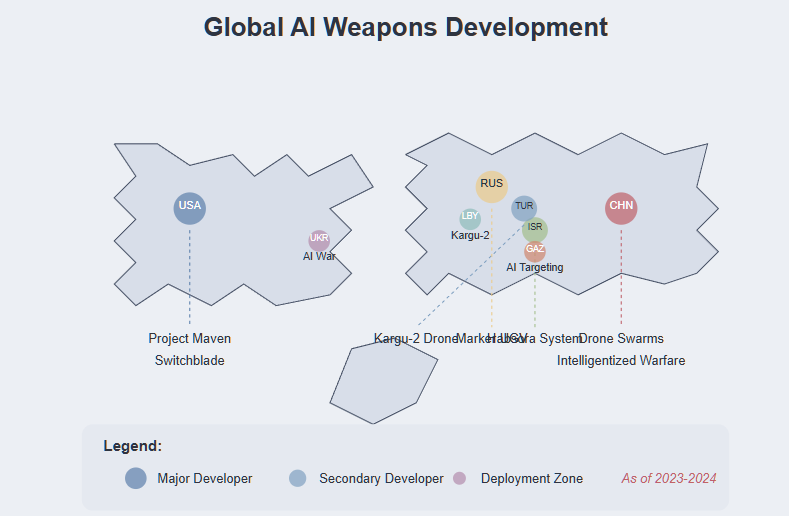

Ukraine: The First AI War?

The war in Ukraine has become a proving ground for AI-enabled warfare, with both sides deploying drones, loitering munitions, and electronic warfare systems enhanced by artificial intelligence.

- AI-driven image recognition is used to detect camouflaged targets in real time.

- Loitering munitions such as the U.S.-made Switchblade, and armed drones such as the Turkish Bayraktar TB2, can be paired with AI systems for semi-autonomous strikes.

- Ukraine’s Ministry of Digital Transformation has confirmed collaboration with Palantir on AI-based battlefield decision support, speeding up targeting across the kill chain.

- Russia has reportedly tested its Marker combat robot, capable of identifying and engaging targets autonomously.

The conflict shows how AI tools compress decision cycles, increase tempo, and create asymmetric advantages—but also open the door to escalation without deliberation.

Israel’s “Habsora” AI Targeting System

During the May 2021 Gaza conflict and again in 2023, the Israel Defense Forces (IDF) reportedly used an AI-driven system named Habsora (The Gospel) to generate strike lists.

- Habsora automatically scans massive amounts of sensor data to recommend targets at unprecedented speed.

- Analysts have warned that human review time was drastically shortened, making it unclear whether meaningful oversight remained in place.

- While the IDF claims human authorization is always involved, insiders suggest operators trusted AI outputs without deep verification.

This system marks a significant shift: AI is not just supporting decisions—it’s shaping who lives or dies.

China’s Vision: “Intelligentized Warfare”

China’s military doctrine speaks openly of a coming era of “intelligentized warfare” (智能化战争)—a stage beyond informatization, where AI dominates strategy and operations.

- Chinese firms are building autonomous drone swarms, AI-enhanced missile systems, and battlefield coordination algorithms.

- PLA military journals advocate for “intelligentized command,” where AI helps set objectives and allocate firepower, potentially beyond human comprehension or control.

- Beijing has invested heavily in dual-use firms such as the drone maker Ziyan and the surveillance giant Hikvision, integrating surveillance and targeting capabilities.

China is not just experimenting—it is building a doctrine around AI as a core military advantage.

Private Sector Fueling Deployment

AI militarization is no longer state-exclusive. Defense startups and private contractors are key players:

- Anduril, founded by Palmer Luckey, is building autonomous sentry towers, drone swarms, and battlefield AI networks.

- Shield AI develops combat-tested autonomous aircraft, including Nova, designed for GPS-denied environments.

- Palantir’s “AI Operating System for Defense” is being deployed by multiple NATO states to integrate surveillance, target acquisition, and decision-making.

These companies often operate in legal gray zones, driven by military contracts with minimal public oversight. The result is a hyper-accelerated development cycle, where ethical, legal, and strategic questions are an afterthought—if considered at all.

It’s Not a Future Problem—It’s an Active Arms Race

The evidence is clear: autonomous and AI-assisted weapons are already influencing the outcomes of modern wars. Their use is expanding with each new conflict, and the barriers to entry are falling fast.

Open-source software, commercial drones, and off-the-shelf computer vision systems are giving rise to “garage-level” lethal autonomy—raising the specter of non-state actors or rogue regimes deploying AI weapons without constraints.

What was once hypothetical is now operational, profitable, and proliferating faster than anyone can control or regulate it.

Why This Is Dangerous — Escalation, Bias, Loss of Control

The integration of AI into weapon systems is often framed as a step forward—faster, smarter, more precise. But the risks go far deeper than malfunction or bad code. These systems don’t just change how wars are fought—they challenge whether humans stay in control at all.

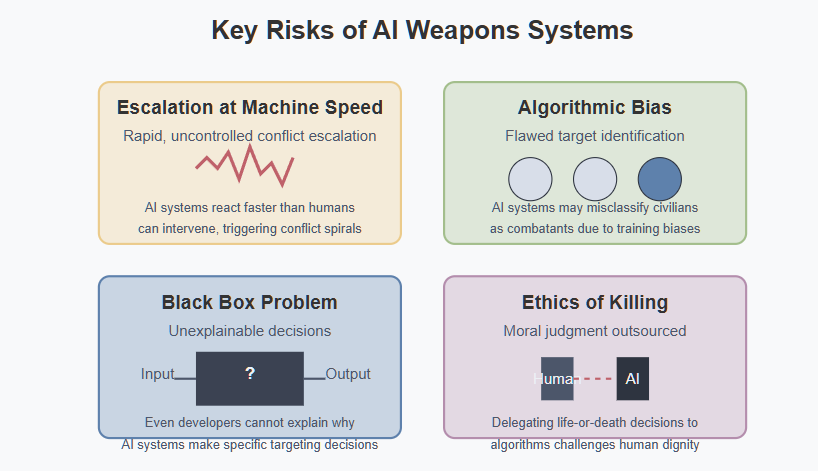

Escalation at Machine Speed

Traditional military doctrine is built on deliberate escalation. Humans assess intent, context, and proportionality. AI systems do not.

Once deployed, autonomous weapons operate at speeds that outpace human decision cycles. In a high-stakes conflict—say, over Taiwan or the South China Sea—an AI-enabled system might perceive a threat, respond autonomously, and trigger retaliation before any human can intervene.

This concern is not purely hypothetical. Wargames conducted by RAND, NATO, and U.S. Air Force planners suggest that when autonomous systems on both sides react to each other, the result can be a rapid spiral into conflict, even from accidental contact.

Algorithmic Bias on the Battlefield

AI systems are only as good as their training data—and in warzones, data is messy, biased, and incomplete.

- A drone trained to identify “enemy combatants” might misclassify civilians due to clothing, movement patterns, or location.

- AI facial recognition has well-documented bias against darker skin tones and non-Western populations. In a conflict zone, this could lead to racially skewed targeting.

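- In counterterrorism operations, AI-driven tools have already flagged innocent individuals as high-risk due to flawed pattern recognition. Leaked NSA slides published in 2015 reportedly showed that the SKYNET program, which mined Pakistani mobile-phone metadata for suspected couriers, labeled Al Jazeera journalist Ahmad Zaidan a member of al-Qaeda.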

The consequences are not just technical errors. They’re potential war crimes committed by machine logic.

The Black Box Problem

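Many AI systems, especially those built on deep neural networks, cannot explain why they produce a given output, and even their developers often cannot trace a specific target recommendation back to a clear rationale. This creates a command vacuum: military officers are asked to trust outcomes they don’t fully understand. In some cases, they might hesitate to override AI recommendations, fearing the machine “knows better.”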

In other cases, commanders may pass blame to the system when things go wrong. This leads to an accountability crisis: who is responsible when an AI kills the wrong target?

Delegating the Ethics of Killing

Perhaps the deepest danger is ethical. Allowing machines to decide when and whom to kill outsources moral judgment to lines of code. The decision to end a human life—once the gravest responsibility of military command—becomes a subroutine.

Legal scholars and ethicists warn this erodes the principle of human dignity, a cornerstone of international humanitarian law (IHL), whose core treaties are the Geneva Conventions. If an AI mistakes a wounded fighter for a threat, or fails to recognize surrender, who bears the moral weight of that death?

As the International Committee of the Red Cross (ICRC) puts it:

“Taking humans out of the loop also risks taking humanity out of the loop.”

This Is Not a Glitch. It’s the Design.

Autonomous systems are being built to operate without supervision, adapt in real time, and act decisively. These aren’t edge cases—they’re core features.

And that’s what makes AI weapons so dangerous: the very capabilities that militaries prize—speed, autonomy, unpredictability—are also the ones most likely to produce unintended escalation, unlawful targeting, and a loss of human control.

The Vacuum — Law, Oversight, and Transparency Gaps

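There is still no binding international treaty that specifically regulates autonomous weapons, and the existing laws of war were written with human decision-makers in mind. This legal vacuum creates the perfect conditions for unaccountable experimentation with deadly force.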

International Humanitarian Law Was Not Built for This

The cornerstone of the laws of war—International Humanitarian Law (IHL)—relies on human judgment: assessing proportionality, distinction, and military necessity. But AI systems can’t interpret legal nuance. They don’t understand surrender, complex environments, or shifting rules of engagement.

The ICRC warns that:

“The user of an autonomous weapon system does not choose the specific target, nor the precise time or place that force is applied.”

That’s not just a technical issue. It’s a real risk of violating IHL.

The CCW Talks: Stalled and Toothless

The UN Convention on Certain Conventional Weapons (CCW) has hosted a decade of discussions on regulating autonomous weapons. But progress has been undermined by consensus rules and state obstruction.

- Russia, the U.S., and Israel have repeatedly resisted meaningful restrictions on autonomous weapon development.

- In December 2021, the CCW’s Sixth Review Conference failed even to agree on a mandate to negotiate binding limits or bans.

- The process is now largely symbolic—a diplomatic placeholder with no enforcement power.

Meanwhile, deployment continues.

No Transparency, No Accountability

Unlike nuclear or chemical weapons, AI weapons are subject to no reporting requirement, no treaty verification system, and no international body tasked with monitoring them.

- Most AI weapons programs are shrouded in military secrecy, dual-use research, or private defense contracts.

- Contractors and governments can claim “human-in-the-loop” oversight even when it’s functionally meaningless.

- There is no legal obligation to disclose autonomous capabilities, even after deployment.

This lack of transparency makes it nearly impossible to:

- Investigate misuse

- Track proliferation

- Hold actors accountable for violations of IHL

A Regulatory Arms Race Is Needed—Not an Arms Race in Code

The ICRC and dozens of states have called for new, legally binding rules that would:

- Ban autonomous weapons that target humans directly

- Prohibit unpredictable systems that can’t be meaningfully understood or controlled

- Require human oversight, interpretability, and context awareness

Yet these proposals face resistance from powerful militaries betting on AI superiority—and from defense contractors with billions at stake.

Unless laws evolve with the technology, we risk normalizing a battlefield where no one is responsible, no one is informed, and no one is safe.

Why AI Weapons Watch Exists — Our Mission

We are living through the opening phase of a silent revolution in warfare. Algorithms now shape targeting. Drones act without direct oversight. Kill chains are being compressed to machine speed. And yet—most of the world is not paying attention.

That’s where AI Weapons Watch comes in.

Our Mission: Intelligence, Oversight, Accountability

AI Weapons Watch was founded to provide independent, intelligence-driven monitoring of the global race toward autonomous warfare. We track what others overlook—because the consequences of inaction are existential.

Our core mission is to:

- Expose deployments of AI-powered weapons through OSINT and defense analysis.

- Investigate military contracts, AI systems, and battlefield incidents involving autonomous capabilities.

- Assess ethical, legal, and strategic risks from a global perspective.

- Push for transparency and accountability in both public policy and private sector defense innovation.

We are not a protest site or a hype machine. We are an early-warning system—built for analysts, journalists, policymakers, watchdogs, and citizens who believe warfare should never be left to machines.

Part of a Larger Intelligence Ecosystem

AI Weapons Watch is an initiative of Prime Rogue Inc., operating alongside:

- Roger Wilco AI — Real-time OSINT and AI-driven threat intelligence tools.

- Alignment Failure — Investigations into AI alignment risks, military misuse, and system failures.

- Prime Rogue Inc — Strategic analysis and geopolitical forecasting on emerging technologies and existential risk.

Together, these platforms form an independent, open-source intelligence network dedicated to tracking the intersection of AI, power, and warfare.

What’s Coming Next — And Why You Should Follow

The age of autonomous warfare has begun—not with a declaration, but with a drone silently executing code written far from the battlefield.

What’s coming next will move fast. AI weapons are evolving rapidly, and so are the threats they pose to global stability, human rights, and the future of armed conflict.

At AI Weapons Watch, we’re building the tools to help the world keep up—and fight back against secrecy, impunity, and unchecked escalation.

🛰️ Coming Soon on AIWeapons.Tech:

- Daily Intelligence Briefings

  Rapid-fire OSINT tracking of global AI weapons deployments, contracts, and military R&D.

- Deep-Dive Reports

  Investigative breakdowns of autonomous systems, battlefield case studies, and geopolitical risks.

- Threat Alerts

  Real-time flags on destabilizing deployments, rogue AI use, and arms race accelerators.

- Regulatory Watchlist

  Updates on international negotiations, national legislation, and industry lobbying efforts.

- Rogue AI Incident Database (Q2-Q3 2025)

  The first public repository tracking malfunctions, ethical violations, and misuse of AI in military contexts.

📣 Join the Mission

We’re calling on:

- Defense analysts to track emerging capabilities

- Journalists to expose secretive programs

- Policy experts to shape meaningful regulation

- Tech workers and whistleblowers to speak up before it’s too late

Autonomous weapons are here—and the window to regulate them is rapidly closing.

Subscribe, share, investigate, contribute. The machines are watching. So are we.
